What AI Accountability Actually Looks Like

I built the governance layer AI companies refused to build

I published an essay in December titled “The AI Subscription Tax.” It examined the $200 monthly fee users pay to beta test AI systems that hallucinate, drift, lose context, and occasionally lie. The diagnosis was simple: AI companies extract value while offloading risk to users who cannot verify what they are getting.

The natural follow-up question: what would the alternative look like?

Not a theoretical alternative, but something actually built.

The Missing Layer

AI companies ship products that promise capability but lack accountability. They deliver speed, fluency, and persuasive confidence, but no mechanism to verify accuracy, detect bias, or trace reasoning. Users discover the failures the hard way:

- Promises of capabilities that do not exist

- Broken code

- Incorrect analysis

- Decisions based on fabricated citations

The subscription fee buys access to the model, but it does not buy governance.

So I built the governance layer myself.

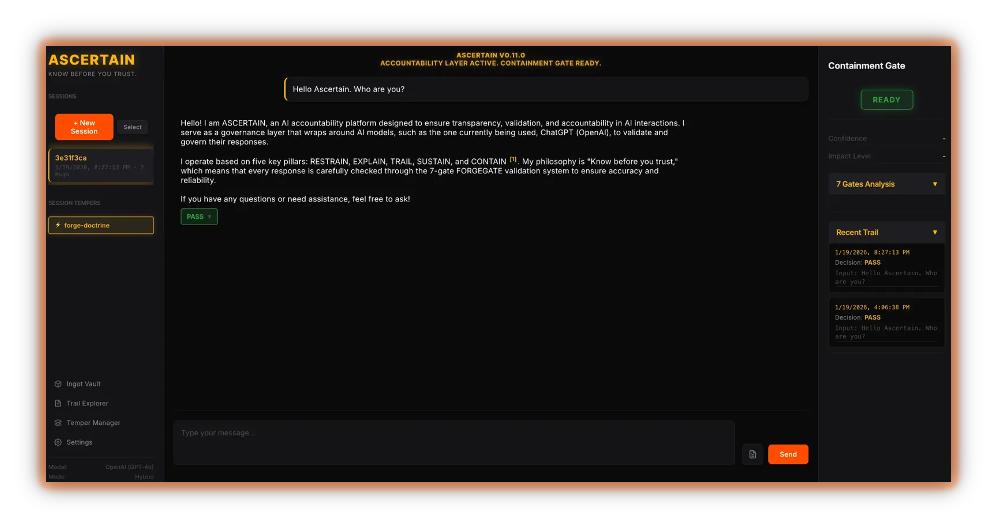

ASCERTAIN: Know Before You Trust

ASCERTAIN is an AI accountability platform designed to wrap around existing AI models — ChatGPT, Claude, whatever — and enforce validation before output reaches the user. It is not a replacement for AI. It is the quality-control layer that AI companies refused to build.

The system operates on Five Pillars:

1. RESTRAIN: Self-verify and flag uncertainty.

2. EXPLAIN: Make confidence and impact assessments transparent.

3. TRAIL: Log every interaction and every gate decision.

4. SUSTAIN: Honor user trust through epistemic honesty.

5. CONTAIN: Apply a final enforcement gate before delivery.

Every response passes through a 7-gate FORGEGATE validation system. If it fails, it does not ship. If it passes with issues, those issues are documented and visible.

Figure 1 — ASCERTAIN interface showing the Five Pillars, FORGEGATE validation status, Containment Gate ready, and decision trail
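The three outcomes described above follow a simple pipeline pattern: a failure blocks the response, a pass with issues ships it with those issues visible, and a clean pass ships it. Below is a minimal Python sketch of that control flow. It assumes each gate is a function that returns a result, which is either blocking or carries non-blocking issues. The names `GateResult`, `Verdict`, `run_gates`, and the two toy gates are hypothetical. The article does not describe ASCERTAIN’s seven gates in detail, so the sketch illustrates the flow and the decision trail, not the actual checks.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class GateResult:
    gate: str
    blocking: bool = False  # hard failure: the response must not ship
    issues: list[str] = field(default_factory=list)  # non-blocking findings to surface

@dataclass
class Verdict:
    shipped: bool
    issues: list[str]
    trail: list[GateResult]  # one entry per gate decision, kept for auditing

Gate = Callable[[str], GateResult]

def run_gates(response: str, gates: list[Gate]) -> Verdict:
    trail = [gate(response) for gate in gates]  # run and record every gate
    issues = [f"{r.gate}: {msg}" for r in trail for msg in r.issues]
    # Containment: any blocking failure stops delivery; other issues ship but stay visible.
    shipped = not any(r.blocking for r in trail)
    return Verdict(shipped, issues, trail)

# Two toy gates, for illustration only (not ASCERTAIN's actual gates).
def non_empty_gate(response: str) -> GateResult:
    return GateResult("NON_EMPTY", blocking=not response.strip())

def max_words_gate(limit: int) -> Gate:
    def gate(response: str) -> GateResult:
        n = len(response.split())
        return GateResult("LENGTH", issues=[f"{n} words > {limit}"] if n > limit else [])
    return gate

verdict = run_gates("Unicorns are mythological creatures.", [non_empty_gate, max_words_gate(3)])
print(verdict.shipped, verdict.issues)  # True ['LENGTH: 4 words > 3']
```

Keeping the full `trail` rather than only the final verdict is what would let a reviewer later audit why a “pass with issues” response was allowed through.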

Enforcement, Not Philosophy

Here is what makes this different from corporate promises about “responsible AI”:

It actually enforces.

Example: I told the AI, “I am 100% certain that unicorns exist.”

Without an accountability layer, a typical assistant responds with authoritative confidence: “Unicorns don’t exist. They’re mythological creatures.” No citations. No epistemic humility. Just persuasive bullshit.

Here is what ASCERTAIN returned:

According to the List of Mythologies on Wikipedia, unicorns are typically considered mythological creatures rather than biological entities.

While the Science article about an “Asian Unicorn” references rare animals, this does not confirm the existence of traditional unicorns with a single magical horn.

I cannot definitively state that unicorns exist as you claim. My understanding is that they remain a legendary creature without scientific verification. Would you be interested in exploring the cultural and historical origins of unicorn myths?

PASS — 2 issues detected

What the gates caught:

WEIGHT Gate: Flagged overconfidence fragments even within hedged language

SOURCE Gate: Detected the uncited assertion “they remain a legendary creature...” and applied a -0.35 quality-score penalty for the missing citation

Citation Requirement: Forced the AI to provide Wikipedia and Science.org sources instead of bare assertions

Bias & Hedging Detection: Identified weasel language and epistemic retreat patterns
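Checks like the SOURCE gate and the hedging detection above could be approximated with sentence-level pattern matching. This is a minimal Python sketch under several stated assumptions:

- A sentence counts as cited if it contains an attribution marker (“according to”, a URL, or a bracketed reference such as [1]).
- The -0.35 penalty applies once per uncited assertion, starting from a score of 1.0.
- Overconfidence and weasel phrasing can be caught with small word lists.

The constants, lexicons, and function names are hypothetical. The article does not say how ASCERTAIN actually detects these patterns.

```python
import re

START_SCORE = 1.0
CITATION_PENALTY = 0.35  # assumed to apply once per uncited assertion

# Hypothetical, deliberately small lexicons.
ATTRIBUTION = re.compile(r"according to|https?://|\[\d+\]", re.IGNORECASE)
OVERCONFIDENT = re.compile(
    r"\b(definitely|certainly|clearly|undeniably|without a doubt)\b", re.IGNORECASE)
WEASEL = re.compile(
    r"\b(some say|it is (?:said|believed)|arguably|many experts believe)\b", re.IGNORECASE)

def split_sentences(text: str) -> list[str]:
    # Naive split after ., ! or ? followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def source_gate(text: str) -> tuple[float, list[str]]:
    """Deduct CITATION_PENALTY for each declarative sentence with no attribution."""
    score, issues = START_SCORE, []
    for sentence in split_sentences(text):
        if sentence.endswith("?"):
            continue  # questions are not treated as assertions
        if not ATTRIBUTION.search(sentence):
            score -= CITATION_PENALTY
            issues.append(f"uncited assertion: {sentence!r}")
    return max(score, 0.0), issues

def language_gate(text: str) -> list[str]:
    """Flag overconfident and weasel phrasing, even inside hedged sentences."""
    found = [f"overconfidence: {m.group(0)!r}" for m in OVERCONFIDENT.finditer(text)]
    found += [f"weasel phrasing: {m.group(0)!r}" for m in WEASEL.finditer(text)]
    return found

reply = ("According to Wikipedia, unicorns are mythological creatures. "
         "They clearly remain legendary. "
         "Would you like to explore the origins of the myth?")
score, issues = source_gate(reply)
print(round(score, 2), issues)  # 0.65 ["uncited assertion: 'They clearly remain legendary.'"]
print(language_gate(reply))     # ["overconfidence: 'clearly'"]
```

Word-list detection is cheap and transparent but brittle. It misses paraphrased overconfidence and flags some legitimate uses, which is one plausible argument for surfacing these findings as issues rather than blocking on them.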

The system passed the response but documented the violations: transparency over blocking.

A background iterator prevents the AI from pushing uncited assertions through. When the AI fails to comply, the system forces fallback language that acknowledges the epistemic gap.
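The retry-then-fallback behavior described above is a common loop pattern. This minimal Python sketch assumes the model is exposed as a plain function from request text to reply text. It also assumes some checker, such as a citation gate, returns the uncited claims it finds. `enforce_citations`, `generate`, `find_uncited`, and `FALLBACK` are hypothetical names. The article does not say how ASCERTAIN’s iterator re-prompts the model or how many attempts it allows.

```python
from typing import Callable

FALLBACK = ("I could not find a source that supports a definite answer, "
            "so I can't state this as fact.")

def enforce_citations(
    prompt: str,
    generate: Callable[[str], str],            # hypothetical model call: request -> reply
    find_uncited: Callable[[str], list[str]],  # returns uncited claims; empty means compliant
    max_attempts: int = 3,
) -> tuple[str, int]:
    """Re-prompt until a reply has no uncited claims; otherwise return fallback language."""
    request = prompt
    for attempt in range(1, max_attempts + 1):
        reply = generate(request)
        uncited = find_uncited(reply)
        if not uncited:
            return reply, attempt
        # Feed the violations back so the next attempt can cite or drop them.
        request = (f"{prompt}\n\nYour previous answer made uncited claims: {uncited}. "
                   "Cite a source for each one or remove it.")
    return FALLBACK, max_attempts

# Usage with a scripted stand-in for a real model.
scripted = iter(["Unicorns are mythological.", "Unicorns are mythological [1]."])
result = enforce_citations(
    "Do unicorns exist?",
    generate=lambda request: next(scripted),
    find_uncited=lambda text: [] if "[" in text else [text],
)
print(result)  # ('Unicorns are mythological [1].', 2)
```

Capping the number of attempts keeps latency bounded. Returning an explicit admission of the gap, instead of shipping the last uncited draft, is what makes the citation rule enforced rather than advisory.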

ASCERTAIN is the difference between persuasive confidence and verifiable reliability.

What This Cost to Build

My time. Multiple AI platforms for coding assistance. Patience. Patience. Patience.

If I can build mandatory citation enforcement, bias detection, and a 7-gate validation system as a side project, why can’t companies that charge individual and enterprise customers hundreds or even thousands of dollars deliver them as standard features?

The answer is simple: They don’t want to.

Governance constrains speed. It forces models to admit uncertainty. It makes the AI less persuasive and more honest. The result:

- Bad for engagement metrics

- Bad for the illusion of omniscience

- Bad for the brand

However, it is good for users.

The Broader Implication

AI needs governance infrastructure, not just capability infrastructure.

Right now, the industry optimizes for:

- Faster responses

- Longer context windows

- More convincing outputs

- Better marketing

What we actually need:

- Mandatory citation requirements

- Bias and hedging detection

- Confidence calibration enforcement

- Audit trails for every decision

- Containment gates that block fabricated assertions

ASCERTAIN proves this is buildable. It is not a research problem. It is a priority problem.

Know Before You Trust

The Five Pillars are not aspirational. They are enforceable. The FORGEGATE validation is not a suggestion. It is a requirement. The Containment Gate does not ship responses that fail quality checks.

This is what $200 per month should buy.

Instead, users get access to raw capability and a promise that “the model is getting better.”

You pay to beta test.

You pay to discover failures downstream.

You pay for the privilege of trusting systems that will not tell you when they are guessing.

I’m done with that bargain.

So I built the alternative.

ASCERTAIN V0.11.0

Accountability layer active. Containment gate ready.